Welcome to the homepage of the

Mathematical Optimization for Data Science Group

Department of Mathematics and Computer Science, Saarland University, Germany

Continuous Optimization

Lecturer: Peter Ochs

Summer Term 2024

Lecture (4h) and Tutorial (2h)

9 ECTS

Lecture: Tuesday 8-10 c.t. in HS003, E1.3

Lecture: Thursday 8-10 c.t. in HS003, E1.3

Date of First Lecture: Tuesday, 16 April 2024

Teaching Assistants: Tejas Natu

Tutorials: by arrangement.

Core Lecture for Mathematics and Computer Science

Language: English

Prerequisites: Basics of Mathematics (e.g. Linear Algebra 1-2, Analysis 1-3, Mathematics 1-3 for Computer Science)

News

06.03.2024: Webpage is online.

Description

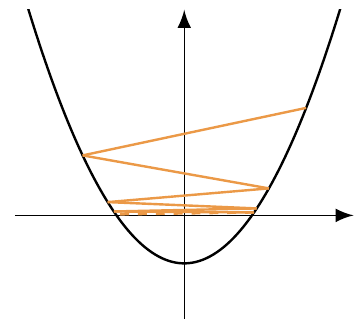

Optimization methods, or algorithms, seek a state of a system that is optimal with respect to an objective function. Depending on the properties of the objective function, different strategies may be used to find such an optimal state (or point). Fundamental knowledge of the classes of functions that can be optimized, the properties of the available optimization strategies, and the properties of the optimal points is crucial for appropriately modeling practical real-world problems as optimization problems. An exact model of the real world that cannot be solved is as useless as an overly simplistic model that can be solved easily.

This lecture introduces the basic algorithms, concepts, and analysis tools for several fundamental classes of continuous optimization problems. The lecture covers the basics of generic Descent Methods, Gradient Descent, Newton Method, Quasi-Newton Method, Gauss-Newton Method, Conjugate Gradient, linear programming, and non-linear programming, as well as optimality conditions for unconstrained and constrained optimization problems. These may be considered the classical topics of continuous optimization. Some of these methods will be implemented and explored for practical problems in the tutorials.

After taking this course, students will have an overview of classical optimization methods and analysis tools for continuous optimization problems, which allows them to model and solve practical problems. Moreover, the tutorials provide some experience in implementing and numerically solving practical problems.
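As a rough illustration of the kind of descent method named in the description (not taken from the course material), the following Python sketch runs gradient descent with a backtracking (Armijo) line search on a small quadratic. The objective `f`, its gradient `grad`, the function name `gradient_descent`, and all parameter values are assumptions chosen for this example.

```python
def gradient_descent(f, grad, x0, step=1.0, shrink=0.5, c=1e-4,
                     tol=1e-8, max_iter=10_000):
    """Minimize f starting from x0 using steepest descent with backtracking."""
    x = list(x0)
    for _ in range(max_iter):
        g = grad(x)
        g_norm_sq = sum(gi * gi for gi in g)
        # Stop once the gradient norm is below the tolerance.
        if g_norm_sq <= tol * tol:
            break
        fx = f(x)
        t = step
        # Backtracking: shrink t until the Armijo sufficient-decrease
        # condition f(x - t g) <= f(x) - c t ||g||^2 holds.
        while True:
            x_new = [xi - t * gi for xi, gi in zip(x, g)]
            if f(x_new) <= fx - c * t * g_norm_sq:
                break
            t *= shrink
        x = x_new
    return x


# Hypothetical test problem: a convex quadratic with minimizer (1, -2).
def f(x):
    return (x[0] - 1.0) ** 2 + 10.0 * (x[1] + 2.0) ** 2


def grad(x):
    return [2.0 * (x[0] - 1.0), 20.0 * (x[1] + 2.0)]


x_star = gradient_descent(f, grad, [0.0, 0.0])
print(x_star)
```

The line search is only one possible step-size rule; a fixed step size or an exact line search would fit the same loop.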

Registration:

The course is organized via Moodle.

Exam:

Oral or written exam depending on the number of students.

Documentation and Schedule:

Participants of this lecture may download the course material directly from Moodle, including detailed lecture notes (script) and exercise sheets. Please note that the provided documentation is not meant to replace attendance at the lectures and tutorials, that it may contain errors, and that it does not exclusively define the contents of the exam. The table of contents of the lecture is as follows:

Literature

The lecture is based on the following literature, which is available via the library:


MOP Group

©2017-2025

The author is not responsible for the content of external pages.

Saarland
