Welcome to the homepage of the

Mathematical Optimization for Data Science Group

Department of Mathematics and Computer Science, Saarland University, Germany

|

Unification of Non-smooth Optimization Algorithms |

|

We seek for algorithms that extend the modelling flexibility for practitioners, i.e., we want to solve new problem structures. In order to achieve this, we generalize existing algorithms to generic frameworks by reducing the convergence proofs to the their essential mechanisms. Besides the gain in flexibility for modeling and solving real world problems, this research yields deep insights into the understanding of algorithms and the connections between them.

Partially funded by the German Research Foundation (DFG Grant OC 150/1-1). | ||||

| Our Contribution: | ||||

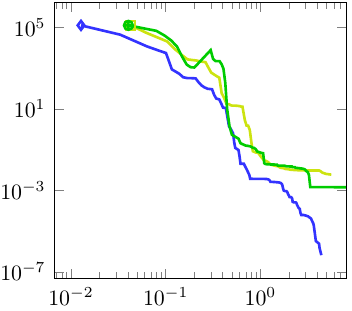

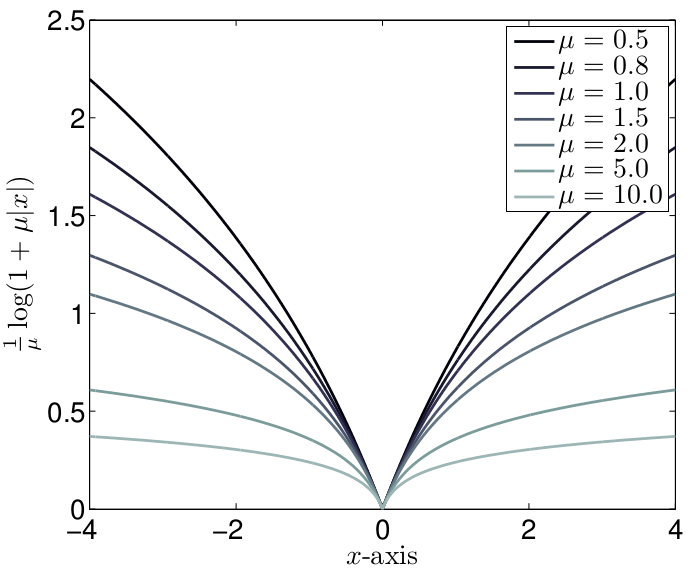

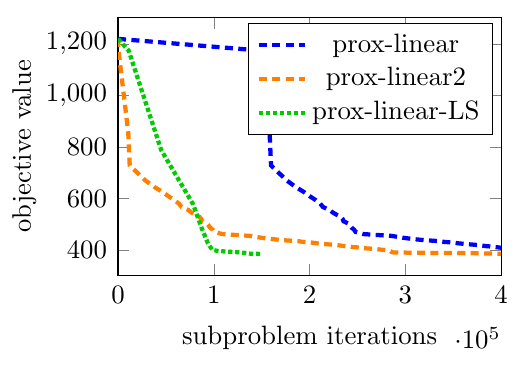

Many algorithms that have been studied recently can be unified in our "model function" framework. The idea is to sequentially minimize model functions that obey a certain approximation quality (approximation error) to the original objective. The minimization of the model function is complemented with a proximity measure, which avoids stepping far away from the previous iterate. We allow for very general proximity measures given by Bregman functions that are generated by Legendre functions. Moreover, the incorporated line-search strategy makes the algorithm efficient, widely applicable, and weakens the assumptions, e.g., for the Gradient Descent instance, we do not require the common (restrictive) Lipschitz continuity of the gradient. The developed framework does not only unify existing algorithms such as Proximal Gradient Descent (or Forward--Backward Splitting), ProxDescent [LW16] A. S. Lewis and S.J. Wright: A proximal method for composite minimization. Mathematical Programming 158(1-2):501--546, 2016. , or several majorization--minimization based optimization algorithms, the framework also provides convergence guarantees for problems that could not be solved by any other algorithm before. We prove subsequential convergence of the iterates generated by this model function algorithm to a stationary point. The second paper establishes global convergence of the full sequence of iterates in the framework of the Kurdyka-Lojasiewicz inequality (see also our research on inertial methods). In order to avoid line search, in this work the recent idea of relative smoothness (L-smad property) [BBT16] H.H. Bauschke, J. Bolte, and M. Teboulle. A descent lemma beyond Lipschitz gradient continuity: first-order methods revisited and applications. Mathematics of Operations Research, 42(2):330–348, 2016. , [BSTV18] J. Bolte, S. Sabach, M. Teboulle, and Y. Vaisbourd. First order methods beyond convexity and Lipschitz gradient continuity with applications to quadratic inverse problems. SIAM Journal on Optimization, 28(3):2131–2151, 2018. is adapted. | ||||

In the same line of research as [OFB19] above, we develop a model function framework that extends the classical Frank--Wolfe method (Conditional Gradient Descent). This generalization allows us to solve non-smooth non-convex optimization problems with a flexible design of the iteration oracle. Naturally, higher order schemes can be derived and interesting variants that combine the Frank--Wolfe oracle with respect to one block of coordinates and a proximal minimization oracle with respect to another block of coordinates are proposed. | ||||

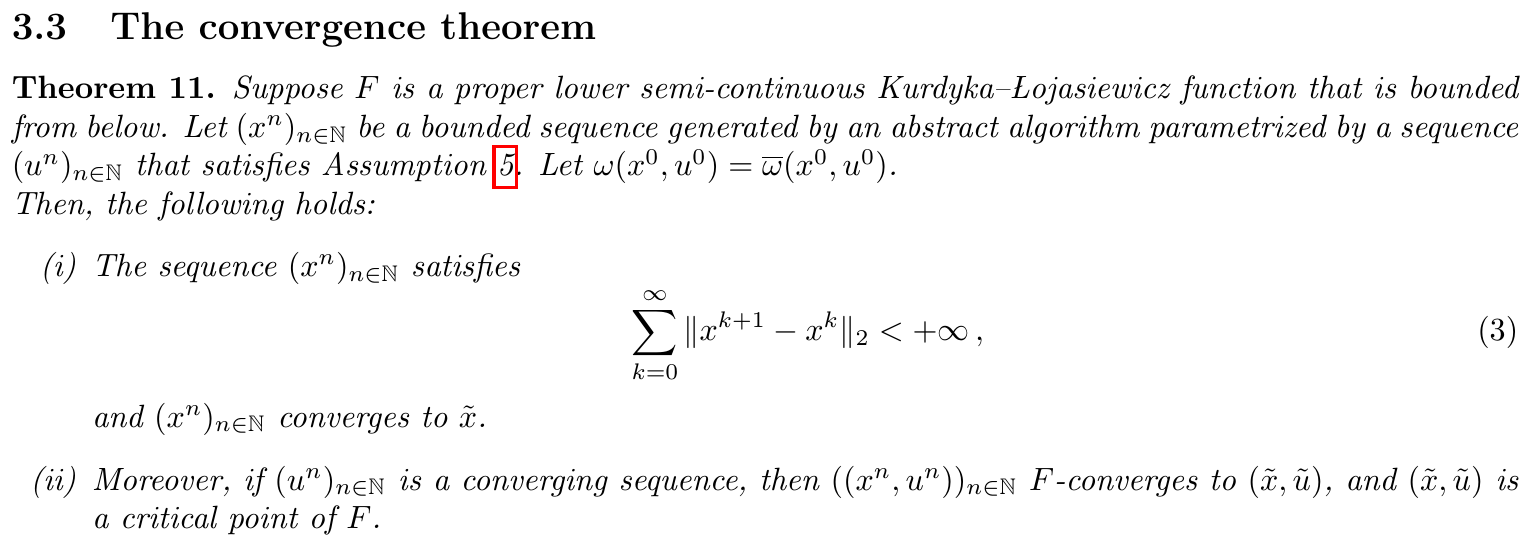

We provide a unifying perspective of inertial algorithms, which yields also block-coordinate descent and variable metric versions. The contribution is in the line of [ABS13] H. Attouch, J. Bolte, and B. F. Svaiter: Convergence of descent methods for semi-algebraic and tame problems: proximal algorithms, forward--backward splitting, and regularized Gauss--Seidel methods. Mathematical Programming 137(1-2):91--129, 2013. . They provide an abstract descent framework that implies convergence of the full sequence of iterates generated by an abstract algorithm to a stationary point. Key for this contribution is the so-called Kurdyka--Lojasiewicz inequality. Basically, the framework requires that the algorithm generates a sequence that satisfies a sufficient decrease condition, a relative error condition, and a continuity condition, which leads to a common analysis of several recent algorithms in non-smooth non-convex optimization. Several follow up papers have modified these three conditions to prove the same global convergence result, yielding new algorithms. Our contribution, unifies most of these modifications and proves global convergence for an abstract algorithm. While, originally, the sufficient decrease condition was imposed on the objective, we impose it on a Lyapunov-like (and also parametric) function, which is crucial for incorporating inertial algorithms. In particular, we propose a block coordinate variable metric version of iPiano. See also our research page on inertial or accelerated algorithms, which also presents algorithms for non-smooth non-convex optimization. | ||||

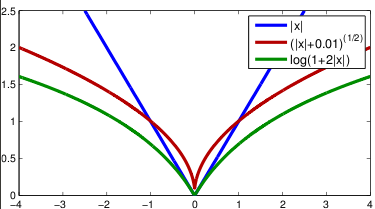

A robust and efficient algorithm was introduced to solve optimization problems in image processing and computer vision that require non-convex regularization. Before, due to the complexity, usually convex surrogates of these non-convex regularizers were used, though the usage of non-convex regularizers can be motivated naturally in various ways, including natural image statistics. Applications are image denoising, deconvolution, optical flow, depth map fusion, etc. The algorithm unifies iteratively reweighted algorithms (e.g. IRLS, IRL1) based on the strategy of majorization--minimization (MM). Motivated from the non-convex regularizer in image processing, we formulate an MM framework, which can be implemented efficiently using primal--dual schemes. |

MOP Group

©2017-2025

The author is not

responsible for

the content of

external pages.

Saarland

Saarland

![[pdf]](/images/pdf_14x14.png)