Welcome to the homepage of the

Mathematical Optimization for Data Science Group

Department of Mathematics and Computer Science, Saarland University, Germany

|

Non-smooth Analysis and Optimization in Data Science |

|

Lecturer: Peter Ochs Winter Term 2023 Lecture (4h) and Tutorial (2h) 9 ECTS Lecture: Tuesday 10-12 c.t. in HS003, E1.3 Thursday 10-12 c.t. in HS003, E1.3 First Lecture: 24. October, 2023 Teaching Assistants: Shida Wang and Tejas Natu Tutorials: by arrangement. Advanced Lecture for Mathematics and Computer Science Language: English Prerequisites: Basics of Mathematics (e.g. Linear Algebra 1-2, Analysis 1-3, Mathematics 1-3 for Computer Science) |

|

| ||||||

| News | ||||||

|

21.09.2023: Webpage is online. | ||||||

| Description | ||||||

|

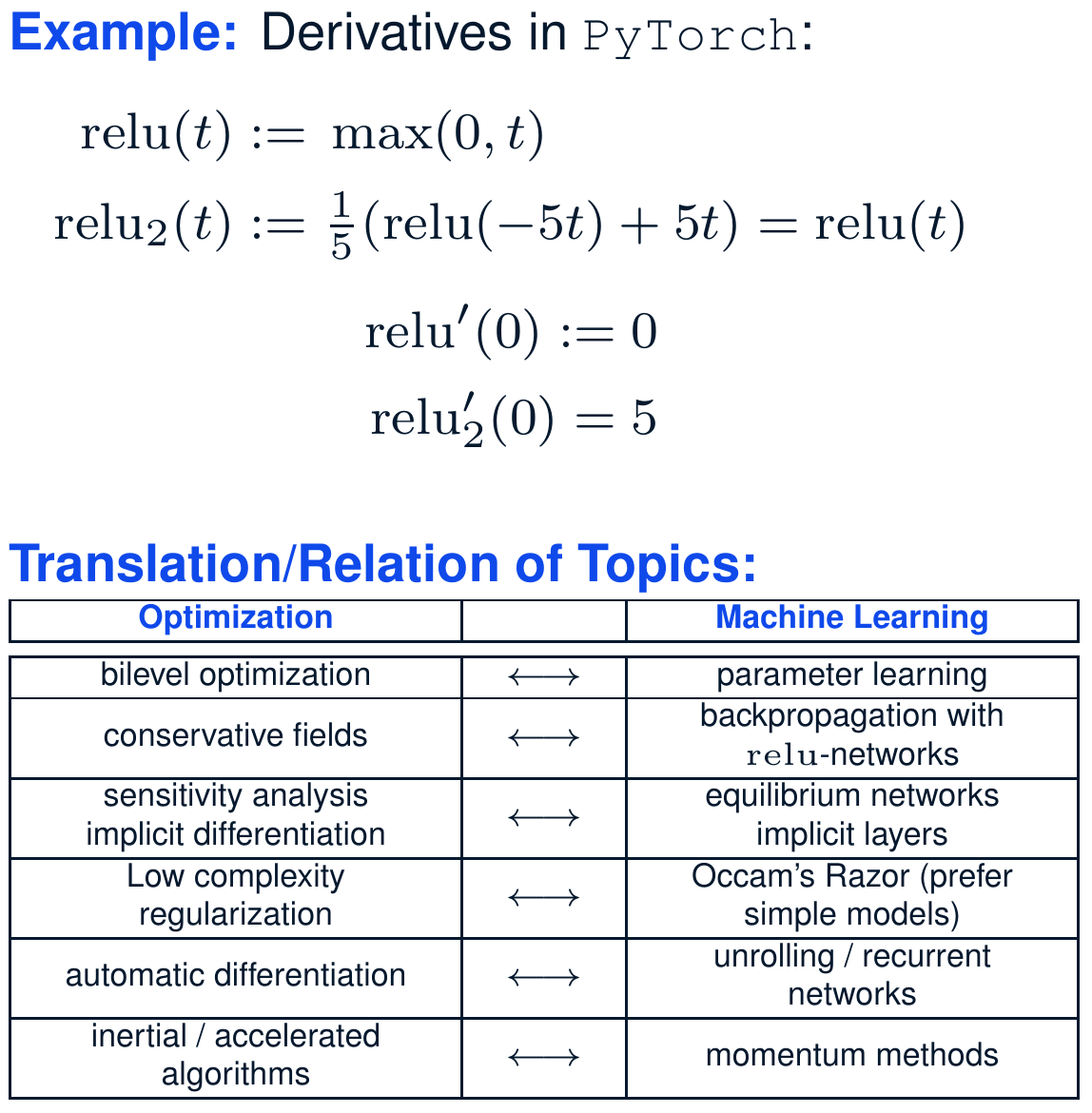

In many Data Science applications, non-smooth features arise naturally, e.g., in the analysis of sparsity, low rank structure, or low complexity regularization in general. Constrained optimization problems can be considered as non-smooth objective functions. Envelope functions, dual Lagrangian relaxations, and many other (algorithmically) important functions are naturally non-smooth. Thereby, non-smoothness is clearly not just an artifact, it is a feature that usually comes with a certain structure. The key is to recognize good structures that can be leveraged. Ignoring the non-smoothness leads to inaccurate solutions or slow algorithms.

The course consists of two parts: (1) basic and advanced concepts of non-smooth analysis and (2) optimization algorithms for non-smooth non-convex optimization that are widely used in non-convex optimization for data science (Machine Learning, Computer Vision, Image Processing, and Statistics, ...). The first milestone in Part 1 is the exploration of Fermat's Rule, a first order optimality condition for non-smooth functions. This requires us to introduce generalized derivatives and is a means to formulate commonly employed Lagrange multiplier rules or KKT conditions as a special case. In order to study algorithms in Part 2 to solve non-smooth non-convex optimization problems, we first introduce the class of semi-algebraic and tame functions that comprise all practically relevant functions, but exclude pathological function. This leads to insights into recent breakthroughs in non-smooth and non-convex optimization that yield strong convergence results to a stationary point, based on the so-called Kurdyka-Lojasiewicz inequality. Besides many classical applications, this lecture presents several research topics in optimization for machine learning, for example,

| ||||||

| Registration: | ||||||

| The coure will be organized via Moodle. | ||||||

| Exam: | ||||||

Oral or written exam depending on the number of students.

Qualification requirements:

| ||||||

| Documentation and Schedule: | ||||||

|

Participants of this lecture may download the course material directly from Moodle, including detailed lecture notes (script) and exercise sheets. Please note that the documentation that is provided is not meant to replace the attendance of the lectures and tutorials, that it may contain errors, and that it is not exclusively meant to define the contents of the exam. | ||||||

| Literature | ||||||

|

MOP Group

©2017-2025

The author is not

responsible for

the content of

external pages.

Saarland

Saarland